Auction-based Crowdsourcing

Last updated: May 12, 2011

Keywords: crowdsourcing, online communities, task markets, auctions, skill evolution

Introduction

Crowdsourcing has emerged as an important paradigm for human problem solving on the Web; currently, the most prominent platform is Amazon Mechanical Turk. One application of crowdsourcing is to outsource to the crowd tasks that are difficult to solve with software services alone. Another potential benefit is the on-demand allocation of a flexible workforce: businesses may outsource certain tasks to the crowd to absorb temporary workload variations. A major challenge in crowdsourcing is to guarantee high-quality processing of tasks. We present a novel crowdsourcing marketplace that matches tasks to suitable workers by means of auctions.

The main challenges addressed in this work relate to building and managing an automated crowd platform. It is important not only to find matching resources and to deliver satisfying quality to the customer, but also to maintain a motivated base of crowd workers and to give them incentives to learn the required skills. Only a satisfied crowd staff that keeps returning helps to maintain the quality of the work and to increase its output. As in any crowd, fluctuations in the workforce must be compensated, and a skill evolution model must support new and existing crowd workers in developing their capabilities and knowledge. Furthermore, the standard processes on such a platform should be automated and run without manual intervention in order to handle the vast number of tasks. On top of that, the model should increase the benefit for all participants.

The fundamental issues addressed by our approach are:

- Automated matching and auctions. To distribute tasks beneficially among the available resources, we organize auctions based on novel mechanisms.

- Stimulating skill evolution. To bootstrap new skills and support inexperienced workers, we foster skill evolution by integrating assessment tasks into our auction model.
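The interplay of auctions and assessment tasks can be illustrated with a small Python sketch. It is not the marketplace's actual mechanism, which this page does not specify: the scoring rule (skill per unit of price), the `skill_threshold` and `assessment_slots` parameters, and the names `Bid` and `run_auction` are hypothetical. The highest-scoring bid wins the task, and a few of the losing, less experienced bidders receive the same task as an assessment task.

```python
from dataclasses import dataclass


@dataclass
class Bid:
    worker_id: str
    price: float  # hypothetical: price the worker asks for the task (> 0)
    skill: float  # hypothetical: worker's level in the required skill, in [0, 1]


def run_auction(bids, assessment_slots=2, skill_threshold=0.5):
    """Hypothetical auction: the bid with the best skill-per-price score wins.

    Up to `assessment_slots` losing bidders whose skill is below
    `skill_threshold` additionally receive the task as an assessment task.
    """
    if not bids:
        raise ValueError("an auction needs at least one bid")
    if any(b.price <= 0 for b in bids):
        raise ValueError("bid prices must be positive")
    # Assumed scoring rule: more skill for less money ranks higher.
    ranked = sorted(bids, key=lambda b: b.skill / b.price, reverse=True)
    winner = ranked[0]
    # Assessment candidates: inexperienced workers among the losing bidders,
    # taken in ranking order.
    learners = [b for b in ranked[1:] if b.skill < skill_threshold]
    return winner, learners[:assessment_slots]


if __name__ == "__main__":
    bids = [
        Bid("alice", 10.0, 0.9),
        Bid("bob", 8.0, 0.4),
        Bid("carol", 12.0, 0.3),
        Bid("dave", 5.0, 0.2),
    ]
    winner, learners = run_auction(bids)
    print(winner.worker_id, [b.worker_id for b in learners])  # → alice ['bob', 'dave']
```

Here alice wins, and the two best-ranked losing bidders below the skill threshold (bob and dave) receive the task for assessment. A real mechanism would also have to fix the price paid to the winner (for example first- or second-price) and break ties, both of which this sketch ignores.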

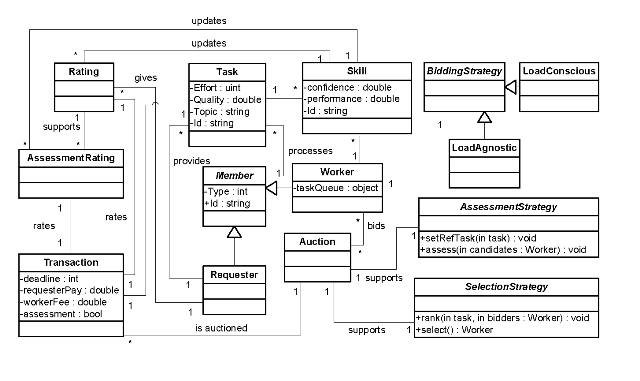

The figure shows the most important entities of our auction-based crowdsourcing marketplace simulation environment and their dependencies.

The crowd’s uniquely identifiable Members consist of Workers and Requesters. The life cycle of a Transaction instance begins once a requester decides to issue a Task to the platform. The Auction class is responsible for conducting auctions. Workers selected by a SelectionStrategy may submit their bids for the announced auctions, and each worker has an individual BiddingStrategy. Once all bids are collected, the platform decides on the winner and on the assessment tasks using the SelectionStrategy and the AssessmentStrategy. Requesters may rate completed tasks, as implemented in Rating. Depending on the auction strategy, the same task is assigned to several workers for assessment; however, only one task (the one actually returned) may be rated by the requester. AssessmentRating is used to rate assessment tasks. Finally, both types of rating update the worker’s skill profile.
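The end of a Transaction, where ratings feed back into skill profiles, can be sketched in Python as follows. Only the class names Worker, Transaction, Rating, and AssessmentRating come from the description above; their fields, the `update_skill_profile` function, the exponential-moving-average update, and the lower weight given to assessment ratings are assumptions made for illustration. The sketch also assumes that the requester rates only the winner's returned result, while each assessment result receives its own AssessmentRating; who produces the assessment scores is left open.

```python
from dataclasses import dataclass, field


@dataclass
class Worker:
    member_id: str
    # Hypothetical skill profile: skill name -> level in [0, 1].
    skills: dict = field(default_factory=dict)


@dataclass
class Rating:
    """Requester's rating of the task result that was actually returned."""
    worker: Worker
    skill: str
    score: float  # assumed to be normalized to [0, 1]


@dataclass
class AssessmentRating(Rating):
    """Rating of an assessment task (hypothetically weighted lower)."""


def update_skill_profile(rating, alpha=0.3, assessment_weight=0.5):
    """Blend a rating into the worker's skill level (assumed moving-average rule)."""
    weight = alpha * assessment_weight if isinstance(rating, AssessmentRating) else alpha
    old = rating.worker.skills.get(rating.skill, 0.0)
    new = (1 - weight) * old + weight * rating.score
    rating.worker.skills[rating.skill] = new
    return new


@dataclass
class Transaction:
    """Hypothetical transaction: one winner plus several assessment workers."""
    skill: str
    winner: Worker
    assessment_workers: list = field(default_factory=list)

    def complete(self, requester_score, assessment_scores):
        # The requester rates only the winner's (actually returned) result.
        update_skill_profile(Rating(self.winner, self.skill, requester_score))
        # Each assessment result gets a separate AssessmentRating.
        for worker, score in zip(self.assessment_workers, assessment_scores):
            update_skill_profile(AssessmentRating(worker, self.skill, score))


if __name__ == "__main__":
    alice = Worker("alice", {"translation": 0.5})
    bob = Worker("bob", {"translation": 0.5})
    Transaction("translation", alice, [bob]).complete(1.0, [1.0])
    print(round(alice.skills["translation"], 3), round(bob.skills["translation"], 3))  # → 0.65 0.575
```

Starting from a skill level of 0.5, a requester rating of 1.0 raises the winner's level to 0.65, whereas an equally good assessment rating raises the assessment worker's level only to 0.575. Weighting assessment ratings lower is one possible way to let inexperienced workers build up skills without letting results the requester never saw count as much as requester feedback.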

Simulation Environment

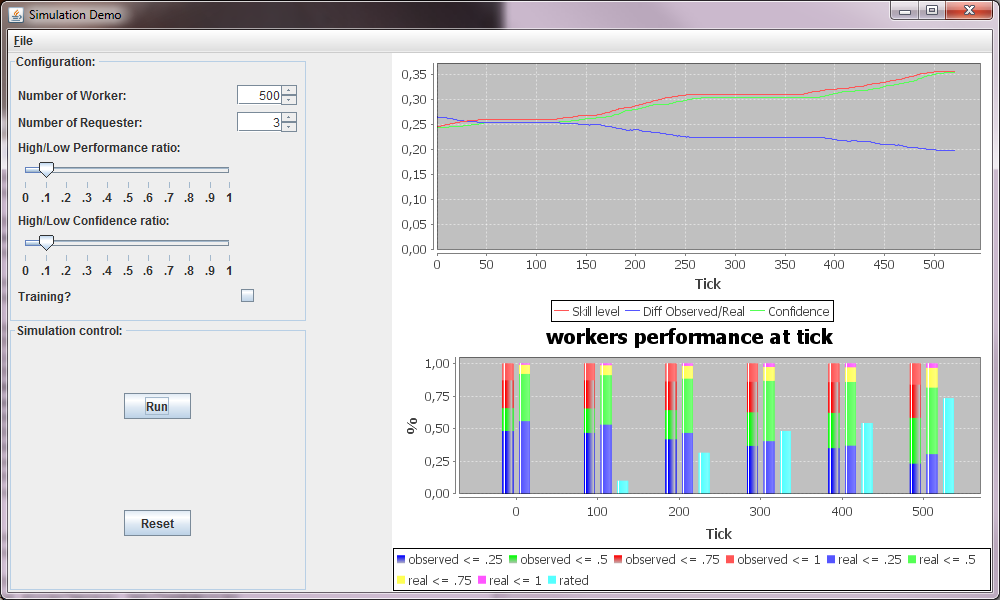

The figure shows a screenshot of the Java-based simulation prototype.

Publications

- Satzger B., Psaier H., Schall D., Dustdar S. Stimulating Skill Evolution in Market-based Crowdsourcing. 9th International Conference on Business Process Management (BPM'11), August 28th to September 2nd 2011, Clermont-Ferrand, France.